I'm not trying to flame bait here, but this whole article refutes the "Java is dead" sentiment that seems to float around regularly among developers. This is a very complicated and sophisticated architecture that leverages the JVM to the hilt. The "big data" architecture that Java and the JVM ecosystem present is really something to be admired, and it can definitely move big data.

I know that competition to this architecture must exist in other frameworks or platforms. But what exactly would replace the HDFS, Spark, and YARN configuration described by the article? Are there equivalents of this stack in non-JVM deployments, or of other big data projects like Storm, Hive, Flink, and Cassandra? And granted, Hadoop is somewhat "old" at this point. Hadoop's MapReduce might be dated, but HDFS is still being used very successfully in big data centers, and I think it (and Google's original MapReduce paper) significantly moved the needle in terms of architecture. Has the cloud and/or Kubernetes completely replaced the described style of architecture at this point? Honest questions above; I'm interested in other thoughts.

"Java is the new COBOL" has always been either a glaring sign of idiocy/ignorance or a bad joke signifying the same. COBOL is exotic syntax and runs on fringe/exotic hardware (mainframes, minicomputers, IBM iron). Java is a C-like syntax that runs everywhere people are shoehorning in Go and Node.js. Syntax arguments are bikeshedding, but Java was a "step forward" for non-systems coding from C, and it has fundamental design, architectural, breadth-of-library, interop, modernization, and familiarity advantages over COBOL. Go is a step back in syntactic power, with possibly some GC advantages, and JavaScript, even with TypeScript, is still a messed-up ecosystem with worse GC and performance issues.

Java isn't as complex an ecosystem as it is because of any failings in the language; it simply has to be that complex as a mature ecosystem. One interesting thing was watching the Ruby on Rails stack, as the years went by and it matured, explode in complexity into an acronym soup nearly as bad as Java's.

I've seen for myself, anecdotally, that most of the time with JSON data the past is ignored during the ramp-up to scale, and then, once the product is live and popular, problems start arising. Usually the cry is "why the hell don't we have a dumb DB rather than this magic dynamic crap?" I now work in a more serious giant corporation where dumb DBs are the default, and it's way more comfortable than I was led to believe when I was younger. It's never perfect, but the belief that data needs neither structure nor a well-automated history of migrations is dangerous.
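To make the point about an automated migration history concrete, here is a minimal sketch, assuming every stored record carries a `version` field and every migration is kept as a forward `up` function plus a reverse `down` script. The record shapes, the two example migrations, and the names `upgrade`, `downgrade`, and `CURRENT_VERSION` are hypothetical and not taken from any particular database or migration tool. The idea is that old data stays readable because the upgrade path lives in code rather than in someone's memory.

```javascript
// Latest record shape the application code understands (hypothetical).
const CURRENT_VERSION = 3;

// Hypothetical migration history. Each entry upgrades a record from version
// `from` to `from + 1` (`up`) and keeps the matching reverse script (`down`).
const migrations = [
  {
    from: 1, // v1 -> v2: split a single "name" string into first/last
    up: ({ name, ...rest }) => {
      const [first, ...others] = name.split(" ");
      return { ...rest, first, last: others.join(" "), version: 2 };
    },
    down: ({ first, last, ...rest }) => ({
      ...rest,
      name: [first, last].filter(Boolean).join(" "),
      version: 1,
    }),
  },
  {
    from: 2, // v2 -> v3: store price as integer cents instead of a float
    up: ({ price, ...rest }) => ({ ...rest, priceCents: Math.round(price * 100), version: 3 }),
    down: ({ priceCents, ...rest }) => ({ ...rest, price: priceCents / 100, version: 2 }),
  },
];

// Bring a stored record of any known version up to CURRENT_VERSION.
// Records written before versioning existed are assumed to be version 1.
function upgrade(record) {
  let r = { version: 1, ...record };
  while (r.version < CURRENT_VERSION) {
    const step = migrations.find((m) => m.from === r.version);
    if (!step) throw new Error(`no migration from version ${r.version}`);
    r = step.up(r);
  }
  return r;
}

// Roll a record back to `target` by applying the reverse scripts in order.
function downgrade(record, target) {
  let r = { ...record };
  while (r.version > target) {
    const step = migrations.find((m) => m.from === r.version - 1);
    if (!step) throw new Error(`no migration down from version ${r.version}`);
    r = step.down(r);
  }
  return r;
}
```

Reading an old record always goes through `upgrade`, so consumer code only ever sees the current shape; `downgrade` plays the role of the reverse script for the rare rollback.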

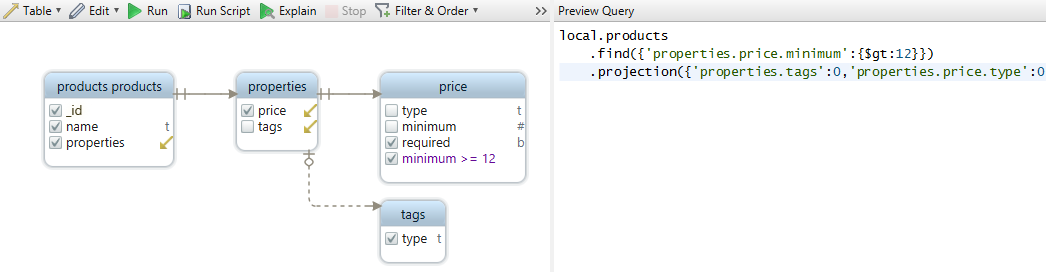

DBSCHEMA MONGODB DESIGN CODE

When it comes to migrations, you don't care as much about rollback as you care about the past. We rarely roll back a successful migration, only for production issues in the following few days, and we always prepare for it with a reverse script. Can you read data from 2 years ago? That can matter a lot more, and you should know it's never done on unstructured data: the code evolves with the new shape week after week, and you're screwed when it's the old consumer code you need to unearth to understand the past.

If you haven't yet done so, please take a minute to read the quickstart to get an idea of how Mongoose works. If you are migrating from 5.x to 6.x, please take a moment to read the migration guide. Everything in Mongoose starts with a Schema. Each schema maps to a MongoDB collection and defines the shape of the documents within that collection. In a schema definition, a field such as `title: String` is shorthand for `title: { type: String }`, and a schema is compiled into a model with `mongoose.model()` (e.g. `const Animal = mongoose.model(…)`). Virtuals are document properties that you can get and set but that do not get persisted to MongoDB. The getters are useful for formatting or combining fields, while setters are useful for decomposing a single value into multiple values for storage.

Suppose you have exactly two logging operations in your entire program. We can label the first one logging operation 1 and the second one logging operation 2. Then, if logging operation 1 occurs, we can write out `1` followed by the values of its variables, because we can reconstruct the message as long as we know which operation occurred (1) and the values of all the variables in the message. This general strategy can be extended to any number of logging operations by just giving them all a unique ID. That is basically the source of their entire improvement.

The only other thing that may cause a non-trivial improvement is that they delta-encode their timestamps instead of writing out what looks to be a 23-character timestamp string. The columnar storage of data and dictionary deduplication, what the article calls Phase 2, is still not fully implemented according to the article's authors and is only expected to result in a 2x improvement. In contrast, the elements I mentioned previously, Phase 1, were responsible for a 169x(!) improvement in storage density.
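The ID-per-logging-operation idea and the timestamp delta encoding described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the article's implementation: the two message templates, the `{}` placeholder syntax, the `YYYY-MM-DD HH:mm:ss.SSS` timestamp format (23 characters, interpreted as UTC), and all function names are hypothetical. Each event is stored as `[opId, millisecondsSincePreviousEvent, ...variables]`, and decoding rebuilds the full text from the template table.

```javascript
// Hypothetical template table: one entry per logging call site, keyed by its ID.
// "{}" marks where a variable value is substituted.
const TEMPLATES = {
  1: "Connected to {} in {} ms",
  2: "User {} uploaded {} bytes",
};

// Substitute variable values into a template, left to right.
function fill(op, vars) {
  let i = 0;
  return TEMPLATES[op].replace(/\{\}/g, () => String(vars[i++]));
}

// Parse/format a 23-character "YYYY-MM-DD HH:mm:ss.SSS" timestamp, assumed UTC.
function toMillis(ts) {
  return Date.parse(ts.replace(" ", "T") + "Z");
}

function fromMillis(ms) {
  return new Date(ms).toISOString().replace("T", " ").slice(0, 23);
}

// What a plain-text logger would write for one event.
function render({ ts, op, vars }) {
  return `${ts} ${fill(op, vars)}`;
}

// Encode each event as [opId, delta from previous timestamp in ms, ...vars].
// The first delta is measured from 0, i.e. it is the absolute time.
function encode(events) {
  let prev = 0;
  return events.map(({ ts, op, vars }) => {
    const ms = toMillis(ts);
    const row = [op, ms - prev, ...vars];
    prev = ms;
    return row;
  });
}

// Rebuild the original log lines from the compact rows.
function decode(rows) {
  let prev = 0;
  return rows.map(([op, delta, ...vars]) => {
    prev += delta;
    return `${fromMillis(prev)} ${fill(op, vars)}`;
  });
}

// Hypothetical sample events.
const sampleEvents = [
  { ts: "2024-03-01 12:00:00.000", op: 1, vars: ["db-1", 42] },
  { ts: "2024-03-01 12:00:00.250", op: 2, vars: ["alice", 1024] },
  { ts: "2024-03-01 12:00:01.000", op: 2, vars: ["bob", 2048] },
];
const encodedRows = encode(sampleEvents);
```

The constant template text is stored once; each event shrinks to a small integer ID, a short delta, and the raw variable values, which is where this kind of scheme gets its savings.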
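Phase 2, columnar storage with dictionary deduplication, can also be illustrated with a small sketch. It assumes each event has already been reduced to a row of the form `[opId, delta, ...variables]` (a hypothetical layout) and that all rows belong to a single logging operation, so every row has the same width. The rows are transposed into columns, and repeated values in each column are replaced by indices into a per-column dictionary. The function names are hypothetical, and this is not the article's storage format.

```javascript
// Dictionary-encode one column: each distinct value is stored once in `dict`,
// and the column itself becomes a list of small integer codes into `dict`.
function dictEncode(values) {
  const dict = [];
  const index = new Map(); // value -> code
  const codes = values.map((v) => {
    if (!index.has(v)) {
      index.set(v, dict.length);
      dict.push(v);
    }
    return index.get(v);
  });
  return { dict, codes };
}

function dictDecode({ dict, codes }) {
  return codes.map((c) => dict[c]);
}

// Transpose equal-width rows (one logging operation) into dictionary-encoded columns.
function toColumns(rows) {
  const width = rows.length > 0 ? rows[0].length : 0;
  const columns = [];
  for (let c = 0; c < width; c++) {
    columns.push(dictEncode(rows.map((row) => row[c])));
  }
  return columns;
}

// Inverse of toColumns: decode every column and transpose back into rows.
function fromColumns(columns) {
  const decoded = columns.map(dictDecode);
  const count = decoded.length > 0 ? decoded[0].length : 0;
  const rows = [];
  for (let i = 0; i < count; i++) {
    rows.push(decoded.map((column) => column[i]));
  }
  return rows;
}

// Hypothetical rows for logging operation 2: [opId, deltaMs, user, bytes]
const sampleRows = [
  [2, 250, "alice", 1024],
  [2, 750, "bob", 2048],
  [2, 10, "alice", 1024],
];
const columns = toColumns(sampleRows);
```

Grouping rows by logging operation first keeps every column homogeneous, which is what lets repeated values such as user names or hostnames collapse into small integer codes.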
